Key takeaways from ESA Living Planet Symposium 2025

Dr Jędrzej Bojanowski, Director of EO Data Science and Products

Attending the ESA Living Planet Symposium 2025 in Vienna was a truly enlightening experience. The scale of the event was impressive - over 7300 people registered (with 6800 attending in person) from 119 countries, showing just how global and dynamic the Earth observation community has become. With more than 250 sessions, 60 agoras, and over 4200 scientific presentations and posters, there was a real sense of momentum, innovation, and diversity throughout the venue. CloudFerro played an active role at this year’s event, contributing to over 30 activities — including delivering presentations during thematic sessions, leading and moderating discussions, participating in panels, conducting tutorials, and presenting posters. Particularly meaningful for us were the interactions with users of initiatives we co-create, such as the CDSE User Review Meeting and the Destination Earth User Exchange Forum. We were present at three booths — CloudFerro, CDSE, and ESA — where we held hundreds of valuable conversations with attendees.

Below are some important insights and conclusions we have drawn from the event and how our endeavours fit into these changes and achievements of the EO sector.

Satellite launch accessibility

Launching satellites is becoming increasingly easier and more affordable. The primary challenge now lies in selecting the right payload to generate valuable insights for both scientific research and business applications. Ground segment providers are adapting to meet this growing demand, with companies like CloudFerro delivering a comprehensive value chain—from data ingestion to a cloud-based storage to advanced processing and seamless dissemination to end users.

Data standards

The topic of data standards, particularly the Spatiotemporal Asset Catalog (STAC), was significantly addressed. Although STAC is widely adopted, challenges remain, such as inconsistencies in validation across different tools and the need for more complex, nested data collections. Nonetheless, our provision of STAC for users in the Copernicus Data Space Ecosystem has received positive feedback.

Shift to cloud-native formats

The transition from traditional data formats like SAFE/JPEG2000 to cloud-native formats such as Zarr is underway. Developments in Zarr v3 and geoZarr are aiming to address issues related to managing numerous small files and enhance functionalities like zoom levels for varying dimensions. However, questions about optimal chunking strategies and metadata presence in individual files persist, along with concerns regarding ensuring data continuity as Copernicus transitions to these new formats.

AI and Machine Learning trends

The integration of AI and machine learning was a prominent theme. Google’s introduction of global EO embeddings—64-dimensional vectors corresponding to each pixel per year—was regarded as a notable advancement. At the same time, CloudFerro had already released its own global embeddings, which were positively received. The potential of these tools and new data formats could transform the utilization of Earth Observation data, despite some initial results lacking visual intuitiveness. There is an expressed need for higher spatiotemporal resolution, with hopes that AI can bridge data gaps and enhance data fusion processes.

And, of course, the European headache – safety, resilience and sustainability kept recurring in various contexts. At CloudFerro we are looking forward to contributing to the ERS (European Resilience from Space), and in the future to the EOGS (Earth Observation Governmental Service) with our insights and our sovereign cloud services.

Community engagement and data federation

There is a growing emphasis on data federation and community collaboration. Users are increasingly interested in accessing multiple data sources through a singular API and login, driving a movement toward more open, collaborative environments. The Earth Observation Exploitation Platform Common Architecture (EOEPCA) is anticipated to set the standard for federated services, a direction also reflected in CloudFerro’s strategic roadmap.

Cloud processing as standard

Cloud processing has established itself as a standard practice in the Earth Observation sector, with CloudFerro gaining recognition during the conference for its contributions in this area. While we may have started as a niche provider, platforms like CREODIAS are now becoming more widely known and trusted. Positive feedback has been noted, especially concerning how our services assist in handling large volumes of satellite data effectively, with an emphasis on the importance of adhering to open standards. Being part of this community continues to inspire us to keep improving and supporting our users even more.

We appreciate the engaging discussions at the CloudFerro, CDSE, and ESA booths, as well as the valuable feedback received on our presentations, sessions, and posters. Stay tuned for further developments as we continue to evolve and enhance our support for the Earth Observation community.

Running private LLM on CloudFerro Virtual machine

by Mateusz Ślaski, Sales Support Engineer

Introduction

Running a Large Language Model (LLM) on your own virtual machine with a high-performance GPU offers several advantages:

- Privacy and Security: You maintain control over your data, reducing the risk of exposure associated with third-party platforms.

- Performance Optimization: You can optimize and configure your environment specifically for your workload, potentially achieving lower latency and faster processing times.

- Customization: You have the flexibility to adjust system resources and settings to tailor the performance and capabilities of the LLM to your specific needs.

- Cost Efficiency: By controlling the computing resources, you can manage costs more effectively, especially if you have fluctuating demands or take advantage of SPOT instances. Additionally VM with LLM shared thru API between team members will replace need of equiping them with local GPU able to run LLM.

- Scalability: You can scale your resources up or down based on demand, allowing you to handle varying workloads efficiently.

- Reduced Dependency: Operating your LLM reduces reliance on third-party infrastructure (in this case you would be dependent only on independent Cloud Provider operating in Europe under EU law), giving you greater independence and control over its operation and maintenance.

- Access to Advanced Features: Cloud operator is able to provide high-performance GPU difficult to purchase by smaller companies, you can test and leverage advanced features and capabilities of LLMs that require significant computational power.

- Continuous Availability: You achieve high availability and reliability, as the virtual machine can be configured to meet uptime requirements without interruptions often associated with shared platforms.

What will you learn from this document?

- You will learn how to run a private Large Language Model (LLM) on a CloudFerro virtual machine using the self hosted Ollama platform.

- You will start by creating a VM on the CREODIAS platform by selecting the appropriate GPU and AI-related options

- Once you set up SSH access, you will verify the GPU visibility to ensure the NVIDIA drivers load correctly.

- You will then proceed with the Ollama installation and verify its ability to recognize the GPU.

- Furthermore, you will be guided on downloading and testing small LLM models from the Ollama Library.

- Next you get details on advanced configurations, including how to expose the Ollama API for network access and set up a reverse proxy with SSL certificates and Basic Authentication for added security.

- Additionally, you will address potential security considerations when you expose the API, either within a cloud tenant or publicly.

Manual procedure

VM creation

To create the VM, please follow this document:

How to create a new Linux VM in OpenStack Dashboard Horizon on CREODIAS

Please note that whaen making the two steps you must choose the GPU and AI related options.

1. When a source image is selected, please use one of the *_NVIDIA_AI images (two Ubuntu and one CentOS are available).

2. An instance must be created with one of the following flavors:

(as available at the end of March 2025)

- WAW3-1

- vm.a6000.1 (1/8 of shared A6000 card)

- vm.a6000.2 (1/4 of shared A6000 card)

- vm.a6000.4 (1/2 of shared A6000 card)

- vm.a6000.8 (full shared A6000 card)

- WAW3-2

Standard instances- gpu.h100 (One H100 card available)

- gpu.h100x2 (Two H100 cards available)

- gpu.h100x4 (Four H100 cards available)

- gpu.l40sx2 (Two L40s cards available)

- gpu.l40sx8 (Eight L40s cards available)

- vm.l40s.1 (1/8 of shared L40s card)

- vm.l40s.2 (1/4 of shared L40s card)

- vm.l40s.4 (1/2 of shared L40s card)

- vm.l40s.8 (full shared L40s card)

Spot instances - spot.vm.l40s.1 (1/8 of shared L40s card)

- spot.vm.l40s.2 (1/4 of shared L40s card)

- spot.vm.l40s.4 (1/2 of shared L40s card)

- spot.vm.l40s.8 (full shared L40s card)

- FRA1-2

- vm.l40s.2 (1/4 of shared L40s card)

- vm.l40s.8 (full shared L40s card)

- WAW4-1

- A new GPU flavors for H100 and L40s NVIDIA GPUs will be available soon (~ end of April 2025).

This tutorial was prepared using "vm.a6000.8" flavor and "Ubuntu 22.04 NVIDIA_AI" image on WAW3-1 region.

Accessing VM with SSH

To configure just created instance, we will access it using SSH.

Depending on the operating system that you use on your local computer, choose one of the below documents:

GPU check

The first step is checking if the GPU is visible by the system and if NVIDIA drivers are loaded properly.

You should be able to run the command:

nvidia-smiAnd you should get the following output:

Fri Mar 21 17:28:32 2025

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.161.07 Driver Version: 535.161.07 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA RTXA6000-48Q On | 00000000:00:05.0 Off | 0 |

| N/A N/A P8 N/A / N/A | 0MiB / 49152MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| No running processes found |

+---------------------------------------------------------------------------------------+ Please note that GPU memory usage is 0MiB of the amount available per selected flavor because it is not used yet.

Ollama installation

According to the official instruction at [Ollama dowload page for Linux](https://ollama.com/download/linux) it is enough to run a single installation script:

curl -fsSL https://ollama.com/install.sh | sh -->You should see the following output with the last message stating that Ollama sees GPU.

>>> Installing ollama to /usr/local

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Creating ollama user...

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

Created symlink /etc/systemd/system/default.target.wants/ollama.service → /etc/systemd/system/ollama.service.

>>> NVIDIA GPU installed.Please note that this installation script not only downloads and installs packages, but additionally runs Ollama web service locally.

If you execute the command:

systemctl status ollamayou will get this output:

● ollama.service - Ollama Service

Loaded: loaded (/etc/systemd/system/ollama.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2025-03-21 19:35:50 UTC; 2 days ago

Main PID: 110150 (ollama)

Tasks: 22 (limit: 135297)

Memory: 1.7G

CPU: 33.690s

CGroup: /system.slice/ollama.service

└─110150 /usr/local/bin/ollama serve

Mar 21 20:57:45 llm-tests ollama[110150]: llama_init_from_model: graph splits = 2

Mar 21 20:57:45 llm-tests ollama[110150]: key clip.use_silu not found in file

Mar 21 20:57:45 llm-tests ollama[110150]: key clip.vision.image_grid_pinpoints not found in file

Mar 21 20:57:45 llm-tests ollama[110150]: key clip.vision.feature_layer not found in file

Mar 21 20:57:45 llm-tests ollama[110150]: key clip.vision.mm_patch_merge_type not found in file

Mar 21 20:57:45 llm-tests ollama[110150]: key clip.vision.image_crop_resolution not found in file

Mar 21 20:57:45 llm-tests ollama[110150]: time=2025-03-21T20:57:45.432Z level=INFO source=server.go:619 msg="llama runner started in 1.01 seconds"

Mar 21 20:57:46 llm-tests ollama[110150]: [GIN] 2025/03/21 - 20:57:46 | 200 | 2.032983756s | 127.0.0.1 | POST "/api/generate"

Mar 23 19:36:29 llm-tests ollama[110150]: [GIN] 2025/03/23 - 19:36:29 | 200 | 59.41µs | 127.0.0.1 | HEAD "/"

Mar 23 19:36:29 llm-tests ollama[110150]: [GIN] 2025/03/23 - 19:36:29 | 200 | 538.938µs | 127.0.0.1 | GET "/api/tags"To test out Ollama installation, we will download 2 small models from Ollama Library.

ollama pull llama3.2:1b

ollama pull moondreamEach of them should give a similar output:

pulling manifest

pulling 74701a8c35f6... 100% ▕█████████████████████████████████████▏ 1.3 GB

pulling 966de95ca8a6... 100% ▕█████████████████████████████████████▏ 1.4 KB

pulling fcc5a6bec9da... 100% ▕█████████████████████████████████████▏ 7.7 KB

pulling a70ff7e570d9... 100% ▕█████████████████████████████████████▏ 6.0 KB

pulling 4f659a1e86d7... 100% ▕█████████████████████████████████████▏ 485 B

verifying sha256 digest

writing manifest

successVerify that they are visible:

ollama listYou should see them on the list

NAME ID SIZE MODIFIED

moondream:latest 55fc3abd3867 1.7 GB 47 hours ago

llama3.2:1b baf6a787fdff 1.3 GB 2 days agoPlease test them by executing one or both commands below.

Remember that to exit the chat ,you need to use /bye command.

ollama run moondreamOr

ollama run llama3.2:1bNow, if you execute:

nvidia-smiCommand.

Then you should have a similar output:

Fri Mar 21 20:58:40 2025

+---------------------------------------------------------------------------------------+

| NVIDIA-SMI 535.161.07 Driver Version: 535.161.07 CUDA Version: 12.2 |

|-----------------------------------------+----------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+======================+======================|

| 0 NVIDIA RTXA6000-48Q On | 00000000:00:05.0 Off | 0 |

| N/A N/A P8 N/A / N/A | 6497MiB / 49152MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+----------------------+----------------------+

+---------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=======================================================================================|

| 0 N/A N/A 1514 C /usr/local/bin/ollama 4099MiB |

| 0 N/A N/A 1568 C /usr/local/bin/ollama 2395MiB |

+---------------------------------------------------------------------------------------+It shows Ollama processes on the list and memory consumption being sum of loaded models.

As mentioned before, the Linux service with Ollama API server should already run in the background.

You may test it with the following Curl request:

curl http://localhost:11434/api/generate -d '{

"model": "moondream",

"prompt": "Why milk is white?"

}'You will receive a bunch of json response messages containing a model answer

{"model":"moondream","created_at":"2025-03-23T19:50:31.694190903Z","response":"\n","done":false}

{"model":"moondream","created_at":"2025-03-23T19:50:31.701052938Z","response":"Mil","done":false}

{"model":"moondream","created_at":"2025-03-23T19:50:31.704855264Z","response":"k","done":false}

{"model":"moondream","created_at":"2025-03-23T19:50:31.70867345Z","response":" is","done":false}

{"model":"moondream","created_at":"2025-03-23T19:50:31.712496186Z","response":" white","done":false}

{"model":"moondream","created_at":"2025-03-23T19:50:31.716349912Z","response":" because","done":false}

...Bigger size models

For now to make this tutorial fluent, we used small models with the size of about 1 GB.

If we have a GPU with more memory we may do test using bigger model. Let's try Llama3.3 with size of 42GB.

When you type name of model in search box on Ollama Libray then you get a list of models with this text in name. Copy model tag and use it locally.

You may activate the download of the model and then run it by a single command.

ollama run llama3.3:latestOr only download the model for further usage:

ollama pull llama3.3:latestTag "llama3.3:latest" should be also used in Curl query when communicating with API.

Additional setup if necessary

If you execute command

ollama serve --helpYou will see a list of environment variables allowing to tune configuration according to your requirements and the hardware used.

In the next section we will set up one of them.

Start ollama

Usage:

ollama serve [flags]

Aliases:

serve, start

Flags:

-h, --help help for serve

Environment Variables:

OLLAMA_DEBUG Show additional debug information (e.g. OLLAMA_DEBUG=1)

OLLAMA_HOST IP Address for the ollama server (default 127.0.0.1:11434)

OLLAMA_KEEP_ALIVE The duration that models stay loaded in memory (default "5m")

OLLAMA_MAX_LOADED_MODELS Maximum number of loaded models per GPU

OLLAMA_MAX_QUEUE Maximum number of queued requests

OLLAMA_MODELS The path to the models directory

OLLAMA_NUM_PARALLEL Maximum number of parallel requests

OLLAMA_NOPRUNE Do not prune model blobs on startup

OLLAMA_ORIGINS A comma separated list of allowed origins

OLLAMA_SCHED_SPREAD Always schedule model across all GPUs

OLLAMA_FLASH_ATTENTION Enabled flash attention

OLLAMA_KV_CACHE_TYPE Quantization type for the K/V cache (default: f16)

OLLAMA_LLM_LIBRARY Set LLM library to bypass autodetection

OLLAMA_GPU_OVERHEAD Reserve a portion of VRAM per GPU (bytes)

OLLAMA_LOAD_TIMEOUT How long to allow model loads to stall before giving up (default "5m")Exposing Ollama API for other hosts in network - Internal use

Edit the file with Ollama service configuration (if necessary replace vim with your editor of choice).

sudo vim /etc/systemd/system/ollama.serviceBy default Ollama is exposed on localhost and port 11434, so it can not be accessed from other hosts in the project. To change the default behavior we add a line, setting Ollama to expose API on All interfaces and lower range port. For this article, we choose port 8765.

Environment="OLLAMA_HOST=0.0.0.0:8765"In [service] section.

So updated File would look like this:

[Unit]

Description=Ollama Service

After=network-online.target

[Service]

ExecStart=/usr/local/bin/ollama serve

User=ollama

Group=ollama

Restart=always

RestartSec=3

Environment="OLLAMA_HOST=0.0.0.0:8765"

Environment="PATH=/opt/miniconda3/condabin:/usr/local/cuda/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin"

[Install]

WantedBy=default.targetAfter this change we have to update the services.

sudo systemctl daemon-reloadsystemctl restart ollama.serviceAnd check if it is running properly.

systemctl status ollama.serviceIf we go now to another VM in the same network and execute a similar Curl request - modified only by changing IP address and port.

curl http://LLM_TEST_VM_IP:8765/api/generate -d '{

"model": "moondream",

"prompt": "Why milk is white?"

}'Important remark:

If we expose API directly in this way in other port, then command ollama wouldn't work. The message will be:

Error: could not connect to ollama app, is it running?It is because the command uses the same API and tries to access it on the default port 11434.

We have to execute the command:

export OLLAMA_HOST=0.0.0.0:8765Or even add it to ~/.bashrc file to make the change permanent.

API security

You have to consider one important thing. Now Ollama API is exposed not only to a single network but also to all hosts in other networks in your project.

If this is not acceptable, you should consider some security settings.

- The first is to create a separate external router and network according to this document:

How to create a network with router in Horizon Dashboard on CREODIAS

API still will be exposed but only inside of a single network. - If it is still not acceptable, then use guidelines from the next chapter.

Exposing Ollama API

In this case we will leave default API sttings for localhost and port 11434. Instead we add reverse proxy that expose API on other port and eventually add some authorization.

sudo apt install nginx

sudo apt install apache2-utilsSet Basic Authentication password. You must retype the password twice.

cd /etc

sudo htpasswd -c .htpasswd ollamaExposing in cloud tenant

Simple NGINX configuration with basic auth but http only.

For internal usage only!

Strongly not recommending using when exposing API in public Internet.

user www-data;

worker_processes auto;

pid /run/nginx.pid;

include /etc/nginx/modules-enabled/*.conf;

events {

worker_connections 768;

}

http {

server {

listen 8765;

# Basic authentication setup

auth_basic "Restricted Area";

auth_basic_user_file /etc/.htpasswd; # File containing usernames and hashed passwords

location / {

proxy_pass http://127.0.0.1:11434;

}

}

}Test Curl request:

curl -u "ollama:YOUR_PASSWORD" http://10.0.0.148:8765/api/generate -d '{

"model": "llama3.3:latest",

"prompt": "Who is Peter Watts?"

}'Exposing API with encryption

Assign public IP to your machine with Ollama using this guide:

How to Add or Remove Floating IP’s to your VM on CREODIAS

Obtain SSL certificate for this IP or domain name and put it in two files on VM:

/etc/ssl/certs/YOUR_CERT_NAME.crt/etc/ssl/private/YOUR_CERT_NAME.key

Or generate a self-signed certificate.

It would be enough for personal or small team usage, but not if you want to expose API for customers or business partners.

sudo openssl req -x509 -nodes -days 365 -newkey rsa:4096 -keyout /etc/ssl/private/YOUR_CERT_NAME.key -out /etc/ssl/certs/YOUR_CERT_NAME.crt -subj "/C=PL/ST=Mazowieckie/L=Warsaw/O=CloudFerro/OU=Tech/CN=OllamaTest"Simple NGINX config with basic auth and https.

user www-data;

worker_processes auto;

pid /run/nginx.pid;

include /etc/nginx/modules-enabled/*.conf;

events {

worker_connections 768;

# multi_accept on;

}

http {

server {

listen 8765 ssl;

server_name testing-ollama;

# Path to SSL certificates

ssl_certificate /etc/ssl/certs/YOUR_CERT_NAME.crt;

ssl_certificate_key /etc/ssl/private/YOUR_CERT_NAME.key;

# Basic authentication setup

auth_basic "Restricted Area";

auth_basic_user_file /etc/.htpasswd; # File containing usernames and hashed passwords

location / {

proxy_pass http://127.0.0.1:11434;

}

}

}Curl test request.

With accepting self signed certificate by -k option:

curl -k -u "ollama:YOUR_PASSWORD" https://YOUR_IP_OR_DOMAIN:8765/api/generate -d '{

"model": "llama3.3:latest",

"prompt": "Who is Peter Watts?"

}'Automated workflow with Terraform

Prerequisites / Preparation

Before you start, please read the documents:

- "Generating and Authorizing Terraform using a Keycloak User on CREODIAS" https://creodias.docs.cloudferro.com/en/latest/openstackdev/Generating-and-authorizing-Terraform-using-Keycloak-user-on-Creodias.html

- "How to Generate or Use Application Credentials via CLI on CREODIAS": https://creodias.docs.cloudferro.com/en/latest/cloud/How-to-generate-or-use-Application-Credentials-via-CLI-on-Creodias.html We will use them to authenticate the Terraform OpenStack provider.

- Additionally, you may review:

- Official Terraform documentation: https://developer.hashicorp.com/terraform

- Terraform OpenStack Provider documentation: https://registry.terraform.io/providers/terraform-provider-openstack/openstack/latest/docs

If necessary, you may also refresh some details about the manual management of: projects, key-pairs, networks, and security groups:

- https://creodias.docs.cloudferro.com/en/latest/networking/Generating-a-SSH-keypair-in-Linux-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/cloud/How-to-create-key-pair-in-OpenStack-Dashboard-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/networking/How-to-Import-SSH-Public-Key-to-OpenStack-Horizon-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/cloud/How-to-use-Security-Groups-in-Horizon-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/networking/How-to-create-a-network-with-router-in-Horizon-Dashboard-on-Creodias.html

Step 1 - Select or Create a Project

You may use the default project in your tenant (usually named "cloud_aaaaa_bb") or create a new one by following the document mentioned below. https://creodias.docs.cloudferro.com/en/latest/openstackcli/How-To-Create-and-Configure-New-Project-on-Creodias-Cloud.html

Step 2 - Install Terraform

There are various ways to install Terraform, some of them are described in the documentation mentioned in the "Preparation" chapter.

If you are using Ubuntu 22.04 LTS or newer and you do not need the latest Terraform release (for the Terraform OpenStack provider, it is not necessary), the easiest way is to use Snap.

First, install Snap:

sudo apt install snapdThen install Terraform:

sudo snap install terraform --classicStep 3 - Allowing Access to Project from Terraform

Now create Application Credentials.

Please follow the document: "How to Generate or Use Application Credentials via CLI on CREODIAS": https://creodias.docs.cloudferro.com/en/latest/cloud/How-to-generate-or-use-Application-Credentials-via-CLI-on-Creodias.html

When you have them ready, save them in a secure location (i.e., password manager) and fill in the variables in the "llm_vm.tfvars" file.

Step 4 - Prepare Configuration Files

As Terraform operates on the entire directory and automatically merges all "*.tf" files into one codebase, we may split our Terraform code into a few files to manage the code more easily.

- main.tf

- variables.tf

- resources.tf

- locals.tf

Additionally, we need two other files:

- llm_vm_user_data.yaml

- llm_api_nginx.conf

- llm_vm.tfvars

File 1 - main.tf

In this file, we keep the main definitions for Terraform and the OpenStack provider.

terraform {

required_version = ">= 0.14.0"

required_providers {

openstack = {

source = "terraform-provider-openstack/openstack"

version = "~> 1.51.1"

}

}

}

provider "openstack" {

auth_url = var.auth_url

region = var.region

user_name = "${var.os_user_name}"

application_credential_id = "${var.os_application_credential_id}"

application_credential_secret = "${var.os_application_credential_secret}"

}File 2 - variables.tf

In this file, we will keep variable definitions.

variable os_user_name {

type = string

}

variable tenant_project_name {

type = string

}

variable os_application_credential_id {

type = string

}

variable os_application_credential_secret {

type = string

}

variable auth_url {

type = string

default = "https://keystone.cloudferro.com:5000"

}

variable region {

type = string

validation {

condition = contains(["WAW3-1", "WAW3-2", "FRA1", "FRA1-2", "WAW4-1"], var.region)

error_message = "Proper region names are: WAW3-1, WAW3-2, FRA1, FRA1-2, WAW4-1"

}

}

#Our friendly name for entire environment.

variable env_id {

type = string

}

# Key-pair created in previous steps

variable env_keypair {

type = string

}

variable internal_network {

type = string

default = "192.168.12.0"

validation {

condition = can(regex("^(10\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])|192\\.168\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9]))$", var.internal_network))

error_message = "Provide proper network address for class 10.a.b.c or 192.168.a.b"

}

}

variable internal_netmask {

type = string

default = "/24"

validation {

condition = can(regex("^\\/(1[6-9]|2[0-4])$", var.internal_netmask))

error_message = "Please use mask size from /16 to /24."

}

}

variable external_network {

type = string

default = "10.8.0.0"

validation {

condition = can(regex("^(10\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])|192\\.168\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9]))$", var.external_network))

error_message = "Provide proper network address for class 10.a.b.c or 192.168.a.b"

}

}

variable llm_image {

type = string

default = "Ubuntu 22.04 NVIDIA_AI"

}

variable llm_flavor {

type = string

}

variable llm_api_port {

type = number

default = 8765

}

variable llm_tag {

type = string

}

variable cert_data {

type = string

default = "/C=colar_system/ST=earth/L=europe/O=good_people/OU=smart_people/CN=OllamaTest"

}File 3 - resources.tf

This is the most significant file where definitions of all entities and resources are stored.

resource "random_password" "ollama_api_pass" {

length = 24

special = true

min_upper = 8

min_lower = 8

min_numeric = 6

min_special = 2

override_special = "-"

keepers = {

tenant = var.tenant_project_name

}

}

output "ollama_api_pass_output" {

value = random_password.ollama_api_pass.result

sensitive = true

}

data "openstack_networking_network_v2" "external_network" {

name = "external"

}

resource "openstack_networking_router_v2" "external_router" {

name = "${var.env_id}-router"

external_network_id = data.openstack_networking_network_v2.external_network.id

}

resource "openstack_networking_network_v2" "env_net" {

name = "${var.env_id}-net"

}

resource "openstack_networking_subnet_v2" "env_net_subnet" {

name = "${var.env_id}-net-subnet"

network_id = openstack_networking_network_v2.env_net.id

cidr = "${var.internal_network}${var.internal_netmask}"

gateway_ip = cidrhost("${var.internal_network}${var.internal_netmask}", 1)

ip_version = 4

enable_dhcp = true

}

resource "openstack_networking_router_interface_v2" "router_interface_external" {

router_id = openstack_networking_router_v2.external_router.id

subnet_id = openstack_networking_subnet_v2.env_net_subnet.id

}

resource "openstack_networking_floatingip_v2" "llm_public_ip" {

pool = "external"

}

resource "openstack_networking_secgroup_v2" "sg_llm_api" {

name = "${var.env_id}-sg-llm-api"

description = "Ollama API"

}

resource "openstack_networking_secgroup_rule_v2" "sg_llm_api_rule_1" {

direction = "ingress"

ethertype = "IPv4"

protocol = "tcp"

port_range_min = var.llm_api_port

port_range_max = var.llm_api_port

remote_ip_prefix = "0.0.0.0/0"

security_group_id = openstack_networking_secgroup_v2.sg_llm_api.id

}

resource "openstack_compute_instance_v2" "llm_server" {

name = "${var.env_id}-server"

image_name = var.llm_image

flavor_name = var.llm_flavor

security_groups = [

"default",

"allow_ping_ssh_icmp_rdp",

openstack_networking_secgroup_v2.sg_llm_api.name

]

key_pair = var.env_keypair

depends_on = [

openstack_networking_subnet_v2.env_net_subnet

]

user_data = local.llm_vm_user_data

network {

uuid = openstack_networking_network_v2.env_net.id

fixed_ip_v4 = cidrhost("${var.internal_network}${var.internal_netmask}", 3)

}

}

resource "openstack_compute_floatingip_associate_v2" "llm_ip_associate" {

floating_ip = openstack_networking_floatingip_v2.llm_public_ip.address

instance_id = openstack_compute_instance_v2.llm_server.id

}File 4 - locals.tf

In this file we keep all values recalculated from any type of input data (variables, templates ...).

locals {

nginx_config = "${templatefile("./llm_api_nginx.conf",

{

ollama_api_port = "${var.llm_api_port}"

}

)}"

llm_vm_user_data = "${templatefile("./llm_vm_user_data.yaml",

{

llm_tag = "${var.llm_tag}"

cert_data = "${var.cert_data}"

ollama_api_pass = "${random_password.ollama_api_pass.result}"

nginx_config_content = "${indent(6, local.nginx_config)}"

}

)}"

}File 5 - llm_vm_user_data.yaml

This is a template of user-data that would be injected into our instance hosting Ollama.

#cloud-config

package_update: true

package_upgrade: true

packages:

- vim

- openssh-server

- nginx

- apache2-utils

write_files:

- path: /etc/nginx/nginx.conf

permissions: '0700'

content: |

${nginx_config_content}

- path: /run/scripts/prepare_llm_vm

permissions: '0700'

defer: true

content: |

#!/bin/bash

curl -fsSL https://ollama.com/install.sh | sh

sleep 5s

systemctl enable ollama.service

systemctl start ollama.service

sleep 5s

export HOME=/root

ollama pull ${llm_tag}

sudo openssl req -x509 -nodes -days 365 -newkey rsa:4096 -keyout /etc/ssl/private/ollama_api.key -out /etc/ssl/certs/ollama_api.crt -subj "${cert_data}"

sudo htpasswd -b -c /etc/.htpasswd ollama ${ollama_api_pass}

systemctl enable nginx

systemctl start nginx

echo 'Ollama ready!' > /var/log/ollama_ready.log

runcmd:

- ["/bin/bash", "/run/scripts/prepare_llm_vm"]File 5 - llm_vm.tfvars

In this file, we will provide values for Terraform variables:

- os_user_name - Enter your username used to authenticate in CREODIAS here.

- tenant_project_name - Name of the project selected or created in step 1.

- os_application_credential_id

- os_application_credential_secret

- region - CloudFerro Cloud region name. Allowed values are: WAW3-1, WAW3-2, FRA1-2, WAW4-1.

- env_id - Name that will prefix all resources created in OpenStack.

- env_keypair - Keypair available in OpenStack. You will use it to log in via SSH to the LLM machine if this would be necessary - For example to use model directly with

ollama run MODEL_TAGcommand. - internal_network - Network class for our environment. Any of 10.a.b.c or 192.168.b.c.

- internal_netmask - Network mask. Allowed values: /24, /16.

- llm_flavor - VM flavor for our Ollama host.

- llm_image - Operating system image to be deployed on our instance.

- llm_tag - Tag from Ollama Library of model that we want automatically download during provisioning.

- cert_data - Values for self signed certificate.

Some of the included data, such as credentials, are sensitive. So if you save this in a Git repository, it is strongly recommended to add the file pattern "*.tfvars" to ".gitignore".

You may also add to this file the variable "external_network".

Do not forget to fill or update variable values in the content below.

os_user_name = "user@domain"

tenant_project_name = "cloud_aaaaa_b"

os_application_credential_id = "enter_ac_id_here"

os_application_credential_secret = "enter_ac_secret_here"

region = ""

env_id = ""

env_keypair = ""

internal_network = "192.168.1.0"

internal_netmask = "/24"

llm_flavor = "vm.a6000.8"

llm_image = "Ubuntu 22.04 NVIDIA_AI"

llm_tag="llama3.2:1b"

cert_data = "/C=PL/ST=Mazowieckie/L=Warsaw/O=CloudFerro/OU=Tech/CN=OllamaTest"Step 5 - Activate Terraform Workspace

A very useful Terraform functionality is workspaces. Using workspaces, you may manage multiple environments with the same code.

Create and enter a directory for our project by executing commands:

mkdir tf_llm

cd tf_llmTo initialize Terraform, execute:

terraform initThen, check workspaces:

terraform workspace listAs an output of the command above, you should see output like this:

* defaultAs we want to use a dedicated workspace for our environment, we must create it. To do this, please execute the command:

terraform workspace new llm_vmTerraform will create a new workspace and switch to it.

Step 6 - Validate Configuration

To ensure the prepared configuration is valid, do two things.

First, execute the command:

terraform validateThen execute Terraform plan:

terraform plan -var-file=llm_vm.tfvarsYou should get as an output a list of messages describing resources that would be created.

Step 7 - Provisioning of Resources

To provision all the resources, execute the command:

terraform apply -var-file=llm_vm.tfvarsAs with the plan command, you should get as an output a list of messages describing resources that would be created, but now finished with a question if you want to apply changes.

You must answer with the full word "yes".

You will see a sequence of messages about the status of provisioning.

Please remember that when the above sequence successfully finishes, the Ollama host is still not ready!

A script configuring the Ollama and downloading selected model is still running on the instance.

The process may take several minutes.

We recommend waiting about 5 minutes.

Step 8 - Obtaining VM IP and basic authorization password

To obtain a public IP address of the created instance, use the following command:

terraform state show openstack_networking_floatingip_v2.llm_public_ipPublic IP of host will be in field "address"

Password to authorize may be displayed by command:

terraform output -jsonPassword text will be at the key "value".

Step 9 - Testing

You may use use LLM directly after accessing the created instance with SSH.

ssh -i ENV_KEY_PAIR eouser@LLM_VM_PUBLIC_IPThen:

ollama run llama3.2:1bIf the instance is accessed from some application via API than, API Test may be done using similar Curl request as previously:

curl -k -u "ollama:GENERATED_PASSWORD" https://PUBLIC_IP:8765/api/generate -d '{

"model": "llama3.2:1b",

"prompt": "Who is Peter Watts?"

}'Step 10 - Removing resources when they are not needed

As GPU instance is more expensive we may completely remove it when it is not needed. By executing the command below you remove only the VM instance. The rest of resources would not be affected.

terraform destroy -var-file=llm_vm.tfvars -target=openstack_compute_instance_v2.llm_serverYou may recreate it simply by running:

terraform apply -var-file=llm_vm.tfvarsStep 11 - Usage

That's all! You may use the created virtual machine with GPU and LLM of your choice.

Happy prompting with your own AI 🙂

Function Calling

by Kacper Schnetzer, AI Engineer & Data Scientist

Function Calling: What is it and how to use it?

Function Calling is a technology that enables intelligent language models to execute application/system functions based on the analysis of provided context and data. This allows for building more interactive, dynamic, and practical applications. By integrating with functions created by developers, models can solve specific problems rather than just generatetext responses. An example of a model supporting Function Calling technology is Llama-3.3-70B-Instruct, which is available in the Sherlock Cloudferro service.

Do all language models allow the use of Function Calling?

Function Calling is currently becoming a standard in new language models. However, it's important to note that not all models support this technology yet.

Application example

A user of a chat on a pizzeria's website wants to order a Margherita pizza. They express their desire in the chat, providing their first name, last name, and the name of the pizza. A traditional chat system can only respond to the user in a text form ("Unfortunately, I cannot place this order for you, I am here only to help you choose a pizza."). With Function Calling, the chat system can place an order for the user before providing a response (assuming that the order placement functionality is available to the chat system and it has all the required data). Only after that will it respond in text form - "Your order has been placed."

Why should we use Function Calling?

In a traditional approach, language models are limited to responding with text. However, many applications require more complex actions, such as:

- Extracting key information from a database and processing it (e.g. displaying a list of orders placed by a user in an online store);

- Responding to user needs by calling appropriate application functions (e.g., placing an order in an online store when the user expresses such a desire in the chat);

- Communicating with external APIs when needed (e.g. retrieving weather data in response to a user's question "What will be the weather tomorrow in Kraków?").

How to use Function Calling in your own applications?

Integrating Function Calling with existing applications is exceptionally simple. The functionalities that the developer wants to make available for potential use by a language model handling, for example, a chat must have a description in the appropriate JSON structure, and the communication process with the language model must be supplemented with application function calls handling . To better understand the integration of Function Calling with an existing system, we will use the example of an online pizza ordering system.

Let's imagine a pizzeria's website that allows users to order pizza via chat, handled by a language model. Thanks to Function Calling, the language model can:

- Understand user requests such as "I would like to order two Pepperoni pizzas and one Margherita."

- Execute functions responsible for placing an order and register it in the system.

- Display a list of a user's current orders upon request by retrieving it from the database.

Function definitions

The order placement application is built using two functions. One allows to place an order (place_order), and the other enables retrieving a list of orders placed by a specific user (get_orders).

def place_order(user, products):

order = {

"user": user,

"products": products,

"status": "accepted"

}

database["orders"].append(order)

return {

"message": f"Order has been placed: {products}",

"order_id": len(database["orders"]) - 1

}

def get_orders(user):

user_orders = [o for o in database["orders"] if o["user"] == user]

if not user_orders:

return {"message": "You don't have any orders."}

return {

"orders": [

{

"order_id": idx,

"products": o["products"],

"status": o["status"]

}

for idx, o in enumerate(user_orders)

]

}Database

The application uses a database, which in this case is imitated by the following object:

database = {

"orders": [

{"user": "john_doe", "products": ["Margherita"], "status": "accepted"},

{"user": "jane_smith", "products": ["Pepperoni", "Napoletana"], "status": "accepted"}

]

}Function definitions for the model

An important step, as mentioned earlier, is to create a description of the functions made available to the model by creating an appropriate JSON structure. This allows the language model to understand which functions are available in our system, what parameters they require, and enables it to make decisions about their use.

tools = [

{

"type": "function",

"function": {

"name": "place_order",

"description": "Places a pizza order.",

"parameters": {

"type": "object",

"properties": {

"user": {

"type": "string",

"description": "First and last name of the user placing the order.",

},

"products": {

"type": "array",

"description": "Names of ordered pizzas.",

"items": {"type": "string"},

},

},

"required": ["user", "products"],

},

},

},

{

"type": "function",

"function": {

"name": "get_orders",

"description": "Returns a list of placed orders.",

"parameters": {

"type": "object",

"properties": {

"user": {

"type": "string",

"description": "First and last name of the user.",

}

},

"required": ["user"],

},

},

},

]Communication with the model

The next stage is handling communication with the language model. In response to a user's query, the model can provide a standard text response, but it can also request execution of one or more functions instead. In the latter case, instead of immediately returning a response to the user, functions selected by the model should be executed first, and then the return values from these functions should be added to the chat history. Finally, a language model response is generated on the chat, to the history of which the responses from the executed functions have been added. The entire process is broken down into more detailed fragments below.

Let's start by creating a simple script that connects to the model API using the openai library. This enables communication not only with the API provided by OpenAI but also with APIs provided by other suppliers, including Cloudferro in the Sherlock service.

import openai

client = openai.OpenAI(

base_url="https://api-sherlock.cloudferro.com/openai/v1",

api_key="XUznNjfktdvQpebkfnzLmvdaEpBQJ",

)Next, for the sake of example, let's create a list of messages in the chat history, which will contain only one message where the user expresses a desire to place a pizza order.

chat_history = [

{"role": "user", "content": "I would like to place an order for a Margherita pizza under the name Jan Nowak."}

]Now let's generate a response to this chat history. Since the user is explicitly asking to place an order in the system, the response will not be text but rather a function call instruction.

completion = client.chat.completions.create(

model="Llama-3.3-70B-Instruct",

messages=chat_history,

tools=tools,

)As a response, we receive an object containing the following particularly important data:

completion.choices[0].message.content- the text of the model's response (an empty string value if the model orders a Function Calling);completion.choices[0].message.tool_calls- a list of functions to execute (is empty in case of a standard response).

The response (completion) also contains extra information that goes beyond the scope of this article. A full description of the object returned by client.chat.completions.create can be found in the openai library documentation.

Typically, the model's response should be placed in the chat history.

chat_history.append(completion.choices[0].message)

In our case, when the user asks to place a pizza order, the model should of course use the place_order function. To verify that this is indeed happening, you should check if completion contains a list completion.choices[0].message.tool_calls. If tool_calls takes a value of None, it means the model did not request execution of any functions. Otherwise, we get a list of functions to call, which in our example looks as follows:

[

ChatCompletionMessageToolCall(

id="RpElTEjLK",

function=Function(

arguments='{"products": ["Margheritta"], "user": "Jan Nowak"}',

name="place_order",

),

type=None,

index=0,

)

]The next step is to call the functions from the list above. By default, this should happen automatically. Implementation of that is the responsibility of the developer of the system in which the Function Calling functionality is embedded. However, for the purposes of the example, we will skip this stage as it is not directly related to Function Calling but to the logic of the system's operation. Therefore, we proceed directly to calling the place_order function, which is the only one that appeared on the list in the model's response.

import json

tool_call = completion.choices[0].message.tool_calls[0]

arguments = json.loads(tool_call.function.arguments)

function_call_result = str(

place_order(user=arguments["user"], products=arguments["products"]),

)Remember that the value returned from the function should be converted to a string type, as this value will be reintroduced into the chat history, which is a list of text data.

The value returned from the place_order function: {'message': "Order has been placed: ['Margheritta']", 'order_id': 2}

The result of the function call is placed in the chat history. Note that the role field should contain the word tool, not assistant, which is only used for textual responses from the model. Additionally, the tool_call_id parameter should be included, which is a reference to the appropriate function call instruction from completion.choices[0].message.tool_calls.

chat_history.append(

{

"role": "tool",

"tool_call_id": tool_call.id,

"content": function_call_result,

}

)Finally, after enriching the chat history with the function call request and the values returned by the functions, we generate another model response.

completion = client.chat.completions.create(

model="Llama-3.3-70B-Instruct",

messages=chat_history,

tools=tools,

)The returned response:

The Margheritta pizza order has been placed under the name Jan Nowak. The order ID is 2.In this simple example, the model requested the execution of only one function. However, there may be a situation where the list of functions to execute is longer. In that case, it is necessary to call each function and place as many elements in the chat history as there were functions to call.

Example:

tool_calls = completion.choices[0].message.tool_calls

...

# here call each function

...

function_call_results = [...] # list of values returned by each function

for value, tool_call in zip(function_call_results, tool_calls):

chat_history.append(

{

"role": "tool",

"tool_call_id": tool_call.id,

"content": str(value),

}

)It is possible for a parameter of one function to be a value returned by another function. Let's assume there is a function that allows converting a user ID to their first and last name.

id_to_name = {

"0": "Jan Nowak"

}

def get_name_from_id(user_id):

return id_to_name[user_id]In the pizzeria example, if the user did not provide their first and last name, but instead their identifier, the model should first instruct the execution of one function - get_name_from_id. Only in the next step should it ask the system to execute the place_order function. Whether this actually happens depends on the quality of the model used and its reasoning abilities, so it is important to safeguard against incorrect parameters given to function calls. In case of an error during a function call, a good practice is to return a clear description to the model of why the function was not executed correctly.

Streaming

The openai library allows for communication with the language model API in two modes:

- standard mode (stream = False) - the API response contains all the generated text;

- streaming mode (stream = True) - the API response is streamed in segments (one application of this mode is dynamic displaying of the model's response in the chat, in real-time, token by token, as soon as tokens are generated).

When the response is streamed in segments, the Function Calling request is also dynamically divided into segments, in real-time during response generation.

Example: Sherlock API

chat_history = [

{

"role": "user",

"content": "I would like to place an order for a Margherita pizza under the name Jan Nowak.",

}

]

stream = client.chat.completions.create(

model="Llama-3.3-70B-Instruct", messages=chat_history, tools=tools, stream=True

)

for chunk in stream:

delta = chunk.choices[0].delta

print(delta, end="\n\n")Program output:

ChoiceDelta(content='', function_call=None, refusal=None, role='assistant', tool_calls=None)

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id='chatcmpl-tool-2e7340ad8926437199ebcfd64bcd8090', function=ChoiceDeltaToolCallFunction(arguments=None, name='place_order'), type='function')])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments='{"user": "', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments='Jan', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments=' Now', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments='ak"', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments=', "products": ["', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments='Marg', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments='her', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments='itta"]}', name=None), type=None)])

ChoiceDelta(content=None, function_call=None, refusal=None, role=None, tool_calls=[ChoiceDeltaToolCall(index=0, id=None, function=ChoiceDeltaToolCallFunction(arguments='', name=None), type=None)])Summary

Function Calling is becoming a standard in modern large language models (LLMs). As the examples above show, it allows to achieve an effect that was previously much more complicated to get and involved a higher error threshold. Modern LLMS models are highly effective at the Function Calling task because they have been trained exactly for this purpose on appropriate datasets.

Function calling increases the possibilities of integrating language models with existing systems and enables the use of these systems in the most natural way - by using the language that humans communicate with. Thanks to these tools, applications will become even easier to use. Language models will become intelligent intermediaries between the user and the system. This mechanism will significantly simplify performing activities in various types of applications - from pizza ordering systems to handling official matters online.

Explore Sherlock platform

Discover a fully managed Generative AI platform with OpenAI-compatible endpoints, enabling seamless integration of advanced AI capabilities into your applications. Access high-performing language models through a unified API, eliminating the complexity of infrastructure management and model operations.

How to use CloudFerro AI Hub Sherlock

by Mateusz Ślaski, Sales Support Engineer, and Jan Szypulski, Product Manager, CloudFerro

Introduction

CloudFerro Sherlock service is a managed Generative AI platform designed to empower organizations with advanced artificial intelligence capabilities without the complexity of managing infrastructure. Here are the key features and benefits of the Sherlock service:

- Sherlock offers access to a curated selection of cutting-edge language models, including models such as:

- Llama 3.1 and 3.3

- Mistral AI

- Bielik

- PLLuM

- DeepSeek

- The platform provides OpenAI-compatible endpoints, allowing seamless integration with existing solutions by simply replacing a few lines of code in the OpenAI library.

- Sherlock prioritizes data security and privacy, operating within a Polish data center. It adheres to a strict no-training policy, meaning user data is not stored or used for model training. The CloudFerro platform is ISO 27001 and ISO 9001 certified, ensuring high standards of security and quality management.

- The service is built on enterprise-ready infrastructure, eliminating the need for organizations to invest in and manage their own IT infrastructure. It utilizes powerful GPU processors for high-performance computing.

- With Sherlock Your organization can quickly integrate advanced AI models into your applications without complex technical overhead.

- Sherlock offers a usage-based billing model with a pay-per-token pricing structure, providing granular cost control and transparent pricing.

- The platform is part of a larger Cloud services ecosystem consisting of compute, storage and access to the Earth Observation data repository. All hosted and managed in the EU.

Overall, CloudFerro AI platform is designed to support businesses in leveraging AI for innovation while ensuring high security and privacy standards.

Sherlock platform has brief but essential documentation available at https://docs.sherlock.cloudferro.com/docs/introduction/.

General CloudFerro cloud documentation is available at https://docs.cloudferro.com/en/latest/index.html.

Agenda

In this guide you will get a list of ready-to-use applications providing GUI for LLMs, and then you will learn how to:

- register at CloudFerro cloud

- set up CF Sherlock AI Project

- generate API Key

- connect selected desktop AI GUI application to Sherlock

Selecting LLM desktop GUI applications

To communicate with LLM you may use various desktop applications.

Here is the list of some selected.

- Ollama: Features: open source, allows downloading, managing, and running LLMs locally. Offers both command-line and graphical interfaces, compatible with macOS, Linux, and Windows.

- AnythingLLM: Features: open source, multi-modal support, integrates with various LLMs and vector databases. Offers multi-user support, customizable chat widgets, and supports multiple document formats.

- Jan: Features: open source, allows running models like Mistral and Llama offline. Supports importing models from Hugging Face and accessing models via OpenAI compatible API.

- LM Studio: Features: Simple interface for finding, downloading, and running models from Hugging Face. Mimics OpenAI’s API for easy integration.

With some research you can find other similar applications and with some analysis taking into consideration your business needs you will choose the one.

For the purpose of this guide, we choose Jan application as it is Open Source software, it has versions for MacOS as Linux and for Windows. Additionally, and they state that are focused on privacy.

Registering in CloudFerro cloud

To use Sherlock’s API, you need to be an admin user of an Organization registered at the CloudFerro Cloud.

A detailed process of registration is described in this article Registration and Setting up an Account.

Remember to set up your organization, as well. You can read how to do it in the article Adding and editing Organization.

Once you are ready, you will be able to create your first project.

Project creation

To generate API key go to Sherlock Control Panel.

You will see empty "List of projects".

Fill the text entry box below “Create new project” label with a name and press the button “Create”.

Authentication

Sherlock by CloudFerro uses API keys for authentication. You can create API keys within the created project in your Control Panel.

To generate API key, go to Sherlock Control Panel.

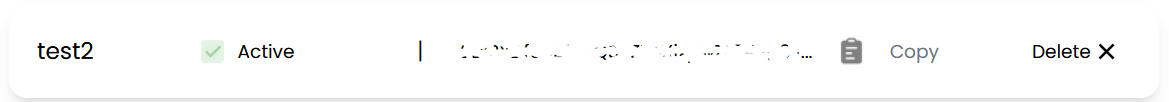

You should see the project created in the previous step:

Click “Details” to open a view for the selected project.

In Sherlock management panel, the API_KEY is called “service key”. Please scroll the view down. You will see the section dedicated to creating keys.

Enter a name for your key and press “Create” button.

You will get the following panel.

Click “Copy” to get key value.

Than save it in a secure location as Password Manager application or service.

Remember that your API key is secret! Do not share it with others or expose it in any client-side code (browsers, apps). Production requests must be routed through your own backend server where your API key can be securely loaded from an environment variable or key management service.

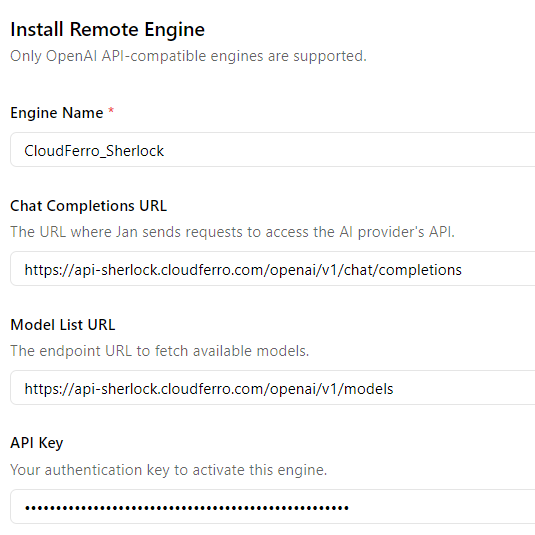

Finding endpoints that will be used in Chat GUI application

Please open Sherlock documentation Models Endpoint. You will see example Python code and example CURL execution.

Please note the following url: https://api-sherlock.cloudferro.com/openai/v1/models

Please open Sherlock documentation Chat Completion Endpoint.

You will see example Python code and example CURL execution.

In this case CURL execution contains the exact link that we are interested in. Url to note is: https://api-sherlock.cloudferro.com/openai/v1/chat/completions

GUI installation

Go to Jan application homepage and download version for your platform. Then, follow the platform related instructions.

When application starts, you will see the window:

To use CloudFerro Sherlock in Jan, you must install a remote engine. Select the hamburger menu just beside the hand icon “☰” then select “Jan” and “Settings”.

Select “Engines” on the left side bar.

Then press “+ Install Engine” button.

You will see the following window:

On this screenshot there are all fields with the data collected in the previous steps:

Scroll the window to the bottom and press “Install button”.

Click speech bubble icon “🗩” on the left side. You will see the “New Thread” window.

Click “Select a model” at the bottom.

You will see CloudFerro Sherlock.

Expand the available models list by clicking“˅” arrow.

Select the model that you want to use.

For this guide, I selected Llama-3.3.

Type your prompt in the field titled “Ask me anything”.

For example, if you enter:

Generate Python "Hello World!" program.

you will receive an answer with the corresponding code:

When you go to Sherlock Billing summary, you will see tokens spent on your conversations.

Summary and further steps

You are now able to set up and use the CloudFerro Sherlock platform with the GUI application (Jan or others after adapting this procedure). You can test hosted models with your data without worrying about its privacy.

In the Sherlock documentation you will find more examples, especially how to use the models with Python code and integrate them with your applications.

Happy prompting!

Explore Sherlock platform

Discover a fully managed Generative AI platform with OpenAI-compatible endpoints, enabling seamless integration of advanced AI capabilities into your applications. Access high-performing language models through a unified API, eliminating the complexity of infrastructure management and model operations.

How to run OpenVPN from Terraform Code

by Mateusz Ślaski, Sales Support Engineer, CloudFerro

The following article covers the subject of creating an OpenVPN instance allowing secure access to the OpenStack network through a VPN tunnel.

We will build step-by-step code containing templates for:

- network for our environment

- necessary security group with rules

- virtual machine instance with automatically configured VPN

- dedicated Object Storage for VPN configuration persistence

Instructions and the way of executing are defined in a way that allows you to learn, by the way, some Terraform and OpenStack functions such as:

- splitting TF code into multiple files

- using TF Workspaces

- using TF templates

- launching instances configured with Cloud-Init as TF and OpenStack "user-data"

Prerequisites / Preparation

Before you start, please read the documents:

- Generating and Authorizing Terraform using a Keycloak User on CREODIAS

- How to Generate or Use Application Credentials via CLI on CREODIAS: We will use them to authenticate the Terraform OpenStack provider.

- Additionally, you may review:

You may also, if necessary, refresh some details about the manual management of: projects, key-pairs, networks, and security groups:

- https://creodias.docs.cloudferro.com/en/latest/networking/Generating-a-SSH-keypair-in-Linux-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/cloud/How-to-create-key-pair-in-OpenStack-Dashboard-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/networking/How-to-Import-SSH-Public-Key-to-OpenStack-Horizon-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/cloud/How-to-use-Security-Groups-in-Horizon-on-Creodias.html

- https://creodias.docs.cloudferro.com/en/latest/networking/How-to-create-a-network-with-router-in-Horizon-Dashboard-on-Creodias.html

Step 1 - Select or create project

You may use the default project in your tenant (usually named "cloud_aaaaa_bb") or create a new one by following the document mentioned below.

Step 2 - Install Terraform

There are various ways to install Terraform, some of which are described in the documentation mentioned in the "Preparation" chapter.

If you are using Ubuntu 22.04 LTS or newer and you do not need the latest Terraform release (for the Terraform OpenStack provider, it is not necessary), the easiest way is to use Snap.

First, install Snap

sudo apt install snapdThen install Terraform

sudo snap install terraform --classicStep 3 - Allowing access to project from Terraform

Now create Application Credentials.

Please follow the mentioned document: "How to Generate or Use Application Credentials via CLI on CREODIAS": https://creodias.docs.cloudferro.com/en/latest/cloud/How-to-generate-or-use-Application-Credentials-via-CLI-on-Creodias.html

When you have them ready, save them in a secure location (i.e., password manager) and fill in the variables in the "my_first_vpn.tfvars" file.

Step 4 - Prepare configuration files

As Terraform operates on the entire directory and automatically merges all "*.tf" files into one codebase, we may split our Terraform code into a few files to manage the code more easily.

- main.tf

- variables.tf

- resources.tf

Additionally, we need two other files:

- open_vpn_user_data.yaml

- my_first_vpn.tfvars

File 1 - main.tf

In this file, we keep the main definitions for Terraform and the OpenStack provider.

terraform {

required_version = ">= 0.14.0"

required_providers {

openstack = {

source = "terraform-provider-openstack/openstack"

version = "~> 1.51.1"

}

}

}

provider "openstack" {

auth_url = var.auth_url

region = var.region

user_name = "${var.os_user_name}"

application_credential_id = "${var.os_application_credential_id}"

application_credential_secret = "${var.os_application_credential_secret}"

}

data "openstack_networking_router_v2" "external_router" {

name = "${var.tenant_project_name}"

}File 2 - variables.tf

In this file, we will keep variable definitions.

# Section provideing data necessary to connect and authenticate to OpenStack

variable os_user_name {

type = string

}

variable tenant_project_name {

type = string

}

variable os_application_credential_id {

type = string

}

variable os_application_credential_secret {

type = string

}

variable "auth_url" {

type = string

default = "https://keystone.cloudferro.com:5000"

}

variable "region" {

type = string

validation {

condition = contains(["WAW3-1", "WAW3-2", "FRA1", "FRA1-2", "WAW4-1"], var.region)

error_message = "Proper region names are: WAW3-1, WAW3-2, FRA1, FRA1-2, WAW4-1"

}

}

#Our friendly name for entire environment.

variable "env_id" {

type = string

}

# Key-pair created in previous steps

variable env_keypair {

type = string

}

variable internal_network {

type = string

default = "192.168.11.0"

validation {

condition = can(regex("^(10\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])|192\\.168\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9]))$", var.internal_network))

error_message = "Provide proper network address for class 10.a.b.c or 192.168.a.b"

}

}

variable internal_netmask {

type = string

default = "/24"

validation {

condition = can(regex("^\\/(1[6-9]|2[0-4])$", var.internal_netmask))

error_message = "Please use mask size from /16 to /24."

}

}

variable external_network {

type = string

default = "10.8.0.0"

validation {

condition = can(regex("^(10\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])|192\\.168\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9])\\.(?:25[0-5]|2[0-4][0-9]|1[0-9]{2}|[1-9]?[0-9]))$", var.external_network))

error_message = "Provide proper network address for class 10.a.b.c or 192.168.a.b"

}

}

variable "vpn_image" {

type = string

default = "Ubuntu 22.04 LTS"

}

variable "vpn_version" {

type = string

}

variable "vpn_flavor" {

type = string

default = "eo2a.xlarge"

}

variable cert_country {

type = string

}

variable cert_province {

type = string

}

variable cert_city {

type = string

}

variable cert_org {

type = string

}

variable cert_email {

type = string

}

variable cert_orgunit {

type = string

}File 3 - resources.tf

This is the most significant file where definitions of all entities and resources are stored.

resource "random_password" "password" {

length = 24

special = true

min_upper = 8

min_lower = 8

min_numeric = 6

min_special = 2

override_special = "-"

keepers = {

tenant = var.tenant_project_name

}

}

resource "openstack_identity_ec2_credential_v3" "object_storage_ec2_key" {

region = var.region

}

resource "openstack_objectstorage_container_v1" "backup_repo" {

name = "${var.env_id}-vpnaac-backup"

region = var.region

}

resource "openstack_networking_secgroup_v2" "sg_openvpn" {

name = "${var.env_id}-sg-openvpn"

description = "OpenVPN UDP port"

}

resource "openstack_networking_secgroup_rule_v2" "sg_openvpn_rule_1" {

direction = "ingress"

ethertype = "IPv4"

protocol = "udp"

port_range_min = 1194

port_range_max = 1194

remote_ip_prefix = "0.0.0.0/0"

security_group_id = openstack_networking_secgroup_v2.sg_openvpn.id

}

data "openstack_networking_router_v2" "project-external-router" {

name = "${var.tenant_project_name}"

}

resource "openstack_networking_network_v2" "env_net" {

name = "${var.env_id}-net"

}

resource "openstack_networking_subnet_v2" "env_net_subnet" {

name = "${var.env_id}-net-subnet"

network_id = openstack_networking_network_v2.env_net.id

cidr = "${var.internal_network}${var.internal_netmask}"

gateway_ip = cidrhost("${var.internal_network}${var.internal_netmask}", 1)

ip_version = 4

enable_dhcp = true

}

resource "openstack_networking_router_interface_v2" "router_interface_external" {

router_id = data.openstack_networking_router_v2.external_router.id

subnet_id = openstack_networking_subnet_v2.env_net_subnet.id

}

resource "openstack_networking_floatingip_v2" "vpn_public_ip" {

pool = "external"

}

resource "openstack_compute_instance_v2" "vpn_server" {

name = "${var.env_id}-vpn-server"

image_name = "Ubuntu 22.04 LTS"

flavor_name = var.vpn_flavor

security_groups = [

"default",

"allow_ping_ssh_icmp_rdp",

openstack_networking_secgroup_v2.sg_openvpn.name

]

key_pair = var.env_keypair

depends_on = [

openstack_networking_subnet_v2.env_net_subnet

]

user_data = "${templatefile("./vpn_user_data.yaml",

{

env_id = "${var.env_id}"

region_name = "${var.region}"

archive_url = "${join("", ["https://s3.", lower(var.region), ".cloudferro.com"])}"

archive_name = "${openstack_objectstorage_container_v1.backup_repo.name}"

archive_access = "${openstack_identity_ec2_credential_v3.object_storage_ec2_key.access}"

archive_secret = "${openstack_identity_ec2_credential_v3.object_storage_ec2_key.secret}"

vpn_version = "${var.vpn_version}"

vpn_net_external = "${var.external_network}"

vpn_net_internal = "${var.internal_network}"

vpn_net_internal_mask = "${cidrnetmask("${var.internal_network}${var.internal_netmask}")}"

vpn_public_ip = "${openstack_networking_floatingip_v2.vpn_public_ip.address}"

cert_pass = "${random_password.password.result}"

cert_country = "${var.cert_country}"

cert_province = "${var.cert_province}"

cert_city = "${var.cert_city}"

cert_org = "${var.cert_org}"

cert_email = "${var.cert_email}"

cert_orgunit = "${var.cert_orgunit}"

}

)}"

network {

uuid = openstack_networking_network_v2.env_net.id

fixed_ip_v4 = cidrhost("${var.internal_network}${var.internal_netmask}", 3)

}

}

resource "openstack_compute_floatingip_associate_v2" "vpn_ip_associate" {

floating_ip = openstack_networking_floatingip_v2.vpn_public_ip.address

instance_id = openstack_compute_instance_v2.vpn_server.id

}File 4 - vpn_user_data.yaml

This is a template of user-data that would be injected into our VPN instance. This file contains configuration and package installation directives and a script responsible for VPN configuration.

#cloud-config

package_update: true

package_upgrade: true

packages:

- openssh-server

- openvpn

- easy-rsa

- iptables

write_files:

- path: /run/scripts/prepare_vpn

permissions: '0700'

content: |

#!/bin/bash

echo "${archive_access}:${archive_secret}" > /home/eouser/.passwd-s3fs-archive

chmod 600 /home/eouser/.passwd-s3fs-archive

REPO_NAME=${archive_name}

if ! [[ -z "$REPO_NAME" ]]

then

mkdir /mnt/archive

echo "/usr/local/bin/s3fs#$REPO_NAME /mnt/archive fuse passwd_file=/home/eouser/.passwd-s3fs-repo,_netdev,allow_other,use_path_request_style,uid=0,umask=0000,mp_umask=0000,gid=0,url=${archive_url},endpoint=default 0 0" >> /etc/fstab

mount /mnt/repo

fi

ENV_ID="${env_id}"

CLIENT_NAME="client-$ENV_ID"

VPN_BACKUP=/mnt/archive/openvpn-backup-$ENV_ID.tar

VPN_VERSION=`cat /mnt/archive/$ENV_ID-vpn-version`

if [[ -f $VPN_BACKUP ]] && [[ "$VPN_VERSION" = "${vpn_version}" ]]

then

tar xf $VPN_BACKUP -C /etc openvpn

tar xf $VPN_BACKUP -C /home/eouser $CLIENT_NAME.ovpn

else

# ---- Server cerificates preparation

make-cadir /etc/openvpn/$ENV_ID-easy-rsa